Authors: Julien Vanwambeke, Gaia-X, Functional Architect and Yassir Sellami, Back-end Software Developer

Introduction

In a previous article (Gaia-X and Contract), we explained how Gaia-X aims to handle contracts, including how the policies set by a service provider are compared with the usage intentions declared by a consumer.

To do so, Gaia-X has chosen to transform everything into ODRL (Open Digital Rights Language) and to perform the reasoning with the Gaia-X Policy Reasoning Engine.

The purpose of this article is to teach you how to swim with this engine by explaining how it works, both functionally and technically.

Functional Overview

The Policy Reasoning Engine is intended to be an open-source library, built by Gaia-X, that performs policy reasoning.

It can be used in any scenario that involves policy reasoning.

So far, we have two use cases in mind for the Gaia-X ecosystem (for more information, read the Gaia-X and Contract article):

- Policy reasoning performed by a catalogue to filter the shortlist of eligible providers for a service.

- Policy reasoning performed by the selected provider during the contracting process.

In both cases, the Policy Reasoning Engine works the same way.

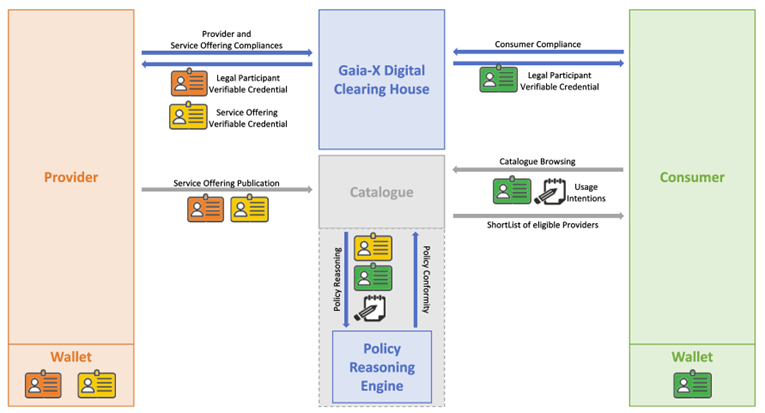

To go deeper into the explanation, let us look at one of these two use cases: the policy reasoning performed while a consumer browses a catalogue.

- First, the provider has to select a Gaia-X Digital Clearing House and use it to obtain its Legal Participant Compliant Verifiable Credential. It also declares its service offering, including its policies, to a Gaia-X Digital Clearing House to obtain the associated Service Offering Verifiable Credential.

- The consumer also has to select a Gaia-X Digital Clearing House and use it to obtain its Legal Participant Verifiable Credential. The Gaia-X Digital Clearing House selected by the consumer does not have to be the same as the one selected by the provider.

- The provider then publishes the service offering to a catalogue, using its Legal Participant Verifiable Credential and the Service Offering Verifiable Credential.

- When the consumer browses a catalogue, it sends its Legal Participant Verifiable Credential and its usage intentions (expressed in ODRL).

- The catalogue first determines which services could be involved in the consumer’s request (the request may require a composition of services).

- Then, the catalogue builds a shortlist of providers able to deliver each requested service.

- Optionally, the catalogue can filter that shortlist using the Policy Reasoning Engine.

- Finally, the catalogue returns the shortlist of providers to the consumer.

To perform the reasoning, the Policy Reasoning Engine requires:

- The Service Offering Verifiable Credential, containing the policies set by the provider.

- Information about the consumer, such as its location (taken, for example, from the consumer’s Legal Participant Verifiable Credential).

- The usage intentions sent by the consumer.

As you can see, policy reasoning has to be performed for each service offered by each provider.
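To make the catalogue flow above more concrete, here is a minimal Python sketch of the optional filtering step. It assumes the engine can be called as a function that takes the three inputs listed above and returns a yes/no answer; the `Reasoner` signature, `ServiceOffering`, `filter_shortlist` and `toy_reasoner` are hypothetical names, and the toy reasoner only compares a country code, so it is a placeholder for real ODRL reasoning.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical engine signature: (service offering VC, consumer VC,
# usage intentions) -> True if the provider's policy allows the request.
Reasoner = Callable[[dict, dict, dict], bool]


@dataclass
class ServiceOffering:
    provider: str
    service: str
    credential: dict  # Service Offering Verifiable Credential, incl. "gx:policy"


def filter_shortlist(
    shortlist: list[ServiceOffering],
    consumer_vc: dict,
    usage_intentions: dict,
    reason: Reasoner,
) -> list[ServiceOffering]:
    """Keep only the offerings whose policy accepts the consumer's request.

    The reasoner runs once per offering, i.e. once per service of each provider.
    """
    return [
        offering
        for offering in shortlist
        if reason(offering.credential, consumer_vc, usage_intentions)
    ]


def toy_reasoner(offering_vc: dict, consumer_vc: dict, usage_intentions: dict) -> bool:
    """Toy stand-in for the engine: only compares a country code (not ODRL)."""
    allowed = offering_vc["gx:policy"]["allowedCountries"]  # hypothetical field
    return consumer_vc["credentialSubject"]["country"] in allowed


shortlist = [
    ServiceOffering("provider-a", "storage", {"gx:policy": {"allowedCountries": ["FR", "BE"]}}),
    ServiceOffering("provider-b", "storage", {"gx:policy": {"allowedCountries": ["DE"]}}),
]
consumer_vc = {"credentialSubject": {"country": "BE"}}  # hypothetical field
kept = filter_shortlist(shortlist, consumer_vc, {"action": "use"}, toy_reasoner)
print([offering.provider for offering in kept])  # ['provider-a']
```

Passing the reasoner in as a parameter keeps the catalogue logic independent of how the engine is actually invoked (library call, HTTP service, and so on).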

And now, this is where the magic happens. So put on your swimwear 🩳 and goggles 🥽, and let us dive into the technical overview.

Technical Overview

As is often the case in Gaia-X, we try to reuse existing specifications and standards to ensure interoperability and compatibility with existing specifications and software.

That is why, for the reasoning, we will be using:

- ODRL (Open Digital Rights Language), a W3C standard policy expression language.

- Verifiable Credentials, also a W3C standard, for cryptographically verifiable claims (serialized as JSON-LD in Gaia-X).

- JSONPath, to query attribute values inside the credentials mentioned above.

- RDF to represent our policies as triples (subject, predicate, object).

- SPARQL to query the aforementioned triples.

Enough scary words; let us dive (pun intended) into how all this works together.

Let us split the process into small steps for easier understanding, even though it can be almost transparent to a user browsing a catalogue.

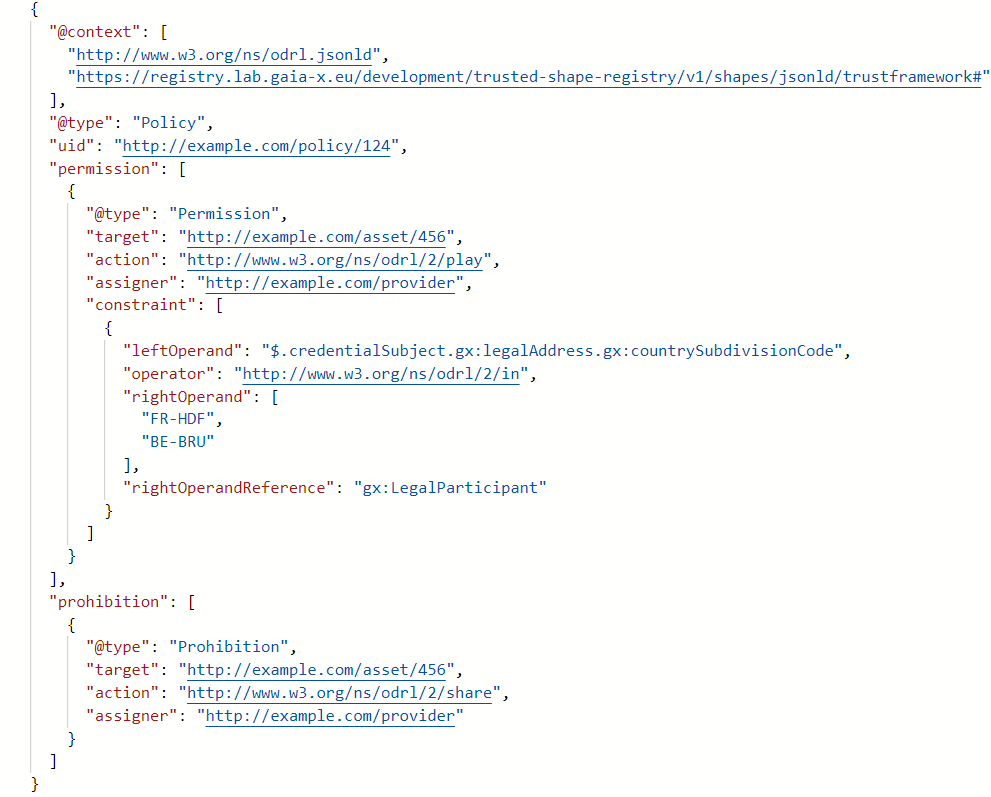

1. First of all, the provider must define a policy for each service. This can be done by putting the policy directly into the “gx:policy” attribute of the Service Offering Verifiable Credential.

   This policy is a standard ODRL Policy in JSON-LD format. However, the constraints of each Rule (Permission, Prohibition, Duty) follow a specific format (see the example at the end of this section):

   - The “leftOperand” contains a JSONPath expression indicating which credential attribute the constraint is evaluated against.

   - The “operator” contains a standard ODRL operator.

   - The “rightOperand” contains the actual values that the claims will be compared against.

   - The “rightOperandReference” refers to the credential type (not limited to Gaia-X credentials), which can be defined in the context.

2. After that, the reasoning engine transforms this policy into RDF triples and inserts them into a graph database.

3. Next, the consumer’s credentials (usually held in a wallet) are used to evaluate the JSONPath expressions supplied in the constraints of step 1.

4. The consumer also has to convey its usage intentions, expressed as an ODRL request policy.

5. Then, using the inputs from steps 3 and 4, a SPARQL query is built against the database from step 2. It is worth noting that the database already contains the ODRL ontology, and possibly others, which make RDFS or OWL reasoning possible (for example, if I am allowed to use something, then I am allowed to display it).

6. Finally, if the query from step 5 returns any results, an agreement can be reached between the consumer and the provider. Otherwise, either the consumer’s usage intentions are not allowed by the provider, or the content of the consumer’s credentials does not match what the provider requires.
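The steps above can be sketched in plain Python. This is a toy model under explicit assumptions, not the engine’s implementation: the policy is flattened into (subject, predicate, object) tuples without real JSON-LD @context expansion, a small basic-graph-pattern matcher stands in for SPARQL over a graph database, and a single hypothetical “includedIn” triple stands in for the ODRL ontology so that a permission to “use” also covers “display”. Constraints (step 3) are left out, and all function names are hypothetical.

```python
import itertools

Triple = tuple[str, str, str]


def to_triples(node: dict, ids=None) -> tuple[str, list[Triple]]:
    """Step 2 (simplified): flatten a JSON-LD-like dict into triples.

    Keys are used as predicates as-is (no @context expansion) and nodes
    without an "@id" receive blank-node ids such as "_:b0".
    """
    ids = ids if ids is not None else itertools.count()
    subject = node.get("@id") or f"_:b{next(ids)}"
    triples: list[Triple] = []
    for key, value in node.items():
        if key == "@id":
            continue
        for item in value if isinstance(value, list) else [value]:
            if isinstance(item, dict):
                child, child_triples = to_triples(item, ids)
                triples.append((subject, key, child))
                triples.extend(child_triples)
            else:
                triples.append((subject, key, str(item)))
    return subject, triples


def _unify(term: str, value: str, binding: dict) -> bool:
    """Match one pattern term; terms starting with '?' are variables."""
    if term.startswith("?"):
        if term in binding:
            return binding[term] == value
        binding[term] = value
        return True
    return term == value


def match(patterns: list[Triple], triples: list[Triple], binding=None) -> list[dict]:
    """Step 5 (simplified): evaluate a SPARQL-like basic graph pattern."""
    binding = binding or {}
    if not patterns:
        return [binding]
    results = []
    for triple in triples:
        candidate = dict(binding)
        if all(_unify(t, v, candidate) for t, v in zip(patterns[0], triple)):
            results.extend(match(patterns[1:], triples, candidate))
    return results


def is_allowed(triples: list[Triple], target: str, action: str) -> bool:
    """Steps 5-6: allowed if a permission covers the request and no prohibition does."""
    # The requested action plus every action it is "includedIn" (toy ontology).
    covering = {action} | {
        row["?parent"] for row in match([(action, "includedIn", "?parent")], triples)
    }

    def has_rule(rule_type: str) -> bool:
        rows = match(
            [("?policy", rule_type, "?rule"),
             ("?rule", "target", target),
             ("?rule", "action", "?action")],
            triples,
        )
        return any(row["?action"] in covering for row in rows)

    return has_rule("permission") and not has_rule("prohibition")


# Hypothetical provider policy; the request (step 4) is a target plus an action.
policy = {
    "@id": "policy:1",
    "permission": [{"target": "asset:456", "action": "use"}],
    "prohibition": [{"target": "asset:456", "action": "share"}],
}
_, triples = to_triples(policy)
triples.append(("display", "includedIn", "use"))  # stand-in for the ODRL ontology

print(is_allowed(triples, "asset:456", "display"))  # True
print(is_allowed(triples, "asset:456", "share"))    # False
```

In a real deployment, a JSON-LD processor and an RDF store with a SPARQL endpoint would replace `to_triples` and `match`; the sketch only shows how the answer to step 6 follows from pattern matching plus one ontology fact.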

Here is an example of a provider’s policy that permits “Play” on an asset “456” under a constraint on the LegalParticipant address, and prohibits “Share” on the same asset:
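As a hedged illustration, the Python dict below sketches what a policy matching this description could look like, following the constraint format described above, together with a check of its constraint against a consumer credential (step 3). The asset and policy IRIs, the attribute path (`gx:headquarterAddress` / `gx:countrySubdivisionCode`), the allowed region codes and the helper names are assumptions, and the JSONPath evaluator supports only a tiny subset (`$` followed by `.name` or `['name']` segments).

```python
import re

# Hypothetical policy matching the description above; in practice it would
# sit in the "gx:policy" attribute of the Service Offering credential.
policy = {
    "@context": "http://www.w3.org/ns/odrl.jsonld",
    "@type": "Set",
    "uid": "http://example.com/policy/1",
    "permission": [{
        "target": "http://example.com/asset/456",
        "action": "play",
        "constraint": [{
            # JSONPath into the consumer's LegalParticipant credential (assumed attribute names).
            "leftOperand": "$.credentialSubject['gx:headquarterAddress']['gx:countrySubdivisionCode']",
            "operator": "isAnyOf",
            "rightOperand": ["FR-IDF", "BE-BRU"],
            "rightOperandReference": "gx:LegalParticipant",
        }],
    }],
    "prohibition": [{"target": "http://example.com/asset/456", "action": "share"}],
}

# Matches one ".name" or "['name']" path segment.
_SEGMENT = re.compile(r"\.([A-Za-z_][\w-]*)|\['([^']+)'\]")

# Small subset of ODRL operators, mapped to Python comparisons.
OPERATORS = {
    "eq": lambda left, right: left == right,
    "neq": lambda left, right: left != right,
    "isAnyOf": lambda left, right: left in right,
}


def eval_jsonpath(path: str, document: dict):
    """Resolve a tiny JSONPath subset; returns None if an attribute is missing."""
    if not path.startswith("$"):
        raise ValueError(f"unsupported JSONPath: {path}")
    value, pos = document, 1
    while pos < len(path):
        segment = _SEGMENT.match(path, pos)
        if segment is None:
            raise ValueError(f"unsupported JSONPath: {path}")
        key = segment.group(1) or segment.group(2)
        if not isinstance(value, dict) or key not in value:
            return None
        value, pos = value[key], segment.end()
    return value


def constraint_holds(constraint: dict, credentials: dict) -> bool:
    """Evaluate one constraint against the credential of the referenced type."""
    credential = credentials.get(constraint["rightOperandReference"])
    if credential is None:
        return False
    left = eval_jsonpath(constraint["leftOperand"], credential)
    return OPERATORS[constraint["operator"]](left, constraint["rightOperand"])


consumer_vc = {
    "type": ["VerifiableCredential"],
    "credentialSubject": {
        "type": "gx:LegalParticipant",
        "gx:headquarterAddress": {"gx:countrySubdivisionCode": "BE-BRU"},
    },
}
constraint = policy["permission"][0]["constraint"][0]
print(constraint_holds(constraint, {"gx:LegalParticipant": consumer_vc}))  # True
```

A missing credential or attribute simply makes the constraint fail, which mirrors the second failure case of step 6: the content of the consumer’s credentials is not what the provider requires.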

Give it a try!

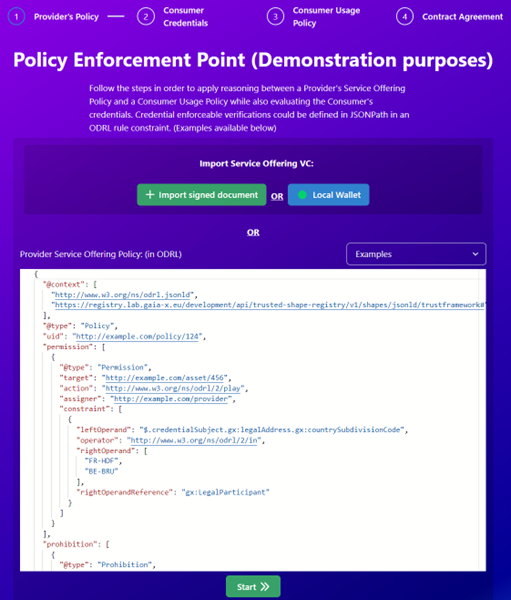

Now, to become the next Michael Phelps 🏊, you need to train! To help you, the Gaia-X Lifeguard Lab Team 🧜 has built a wizard in which you can reproduce the functional steps, using either your own test credentials (legal participant and service offering) and usage intentions or the provided examples, to call the Policy Reasoning Engine and better understand how it works. The library is still a work in progress, but it will be available soon, along with compatibility snippets for several ecosystems.

Conclusion

The Policy Reasoning Engine is a Gaia-X library that helps service providers and catalogue providers go further in machine processing, automatically parsing complex policies and usage intentions to add value for consumers.

To learn more about how Gaia-X enables a data economy in which everything is readable by both humans and machines, building trust between actors, stay tuned!